Architectural Design of an Experimental Platform for Autonomous Driving

Renderings and Drawings of Design for Research Project

Collaboration with Dr. Eugene Vorobeychik, Funded by Office of the Vice Chancellor of Research, Interdisciplinary Research Seed Grant, Washington University in St. Louis, McKelvey Hall, St. Louis, MO, 2019-Present

Autonomous driving has, in the last two decades, advanced from fundamental research to reality, with autonomous cars already on the road in Arizona and California, even if restricted to relatively small geographical areas. Autonomous cars hold tremendous promise, with benefits ranging from reducing greenhouse gas emissions (due to the use of electric car platforms) to reducing the number of driving accidents and deaths.[1] However, in many ways, autonomous driving is still in its early stages: its safety remains relatively unproven and its spatial implementation untested. In particular, while modern technology is clearly capable of handling typical driving scenarios, it is far from clear how vulnerable state-of-the-art algorithmic techniques are to unusual scenarios which, while individually rare, are collectively too common to ignore (the so-called heavy tail of the scenario distribution). Moreover, recent research has demonstrated a host of potential vulnerabilities in the perceptual architecture of modern autonomous vehicles (AVs),[2],[3],[4] such as the ability of malicious parties to mislead stop sign detection and cause stop signs to be mistaken for speed limit signs.[5] At the same time, driverless cars present opportunities to address problems of mobility in the built environment, including environmental degradation, inequities in access to transit, and the economic injustice and segregation caused in cities by the expansion of highways.[6],[7],[8] Yet despite this potential, widespread AV implementation could just as easily exacerbate these problems.

A fundamental limitation today is that most studies of these vulnerabilities are conducted in very specific, limited scenarios or in fully digital simulations; thus, the vulnerability of modern autonomous driving techniques in physical driving settings is still poorly understood. The path forward lies in multi-faceted experimental research that merges digital, simulation-based analysis with physical evaluation. However, physical research in autonomous driving typically involves actual autonomous cars and large-scale, expensive test facilities. The cost of these environments significantly limits the scope of experimentation and, consequently, lengthens the time required to build confidence in the safety of autonomous vehicles as they are deployed in practice.

The core innovation of the proposed project is to develop a miniature physical autonomous driving platform that enables intermediate-scale physical experimentation, combining knowledge from computer science and architecture. From an engineering perspective, this scale platform will enable the research team to evaluate the limits of autonomy at significantly lower cost than full-scale experimental facilities. It will also allow far more extensive stress-testing of modern autonomous driving approaches, for example, through tests designed to be explicitly adversarial (e.g., causing the cars to malfunction). From an architectural perspective, the model allows the team to examine how AVs can be integrated into cities in ways that improve public health and safety, encourage equitable access to public transit, and support climate resilience.

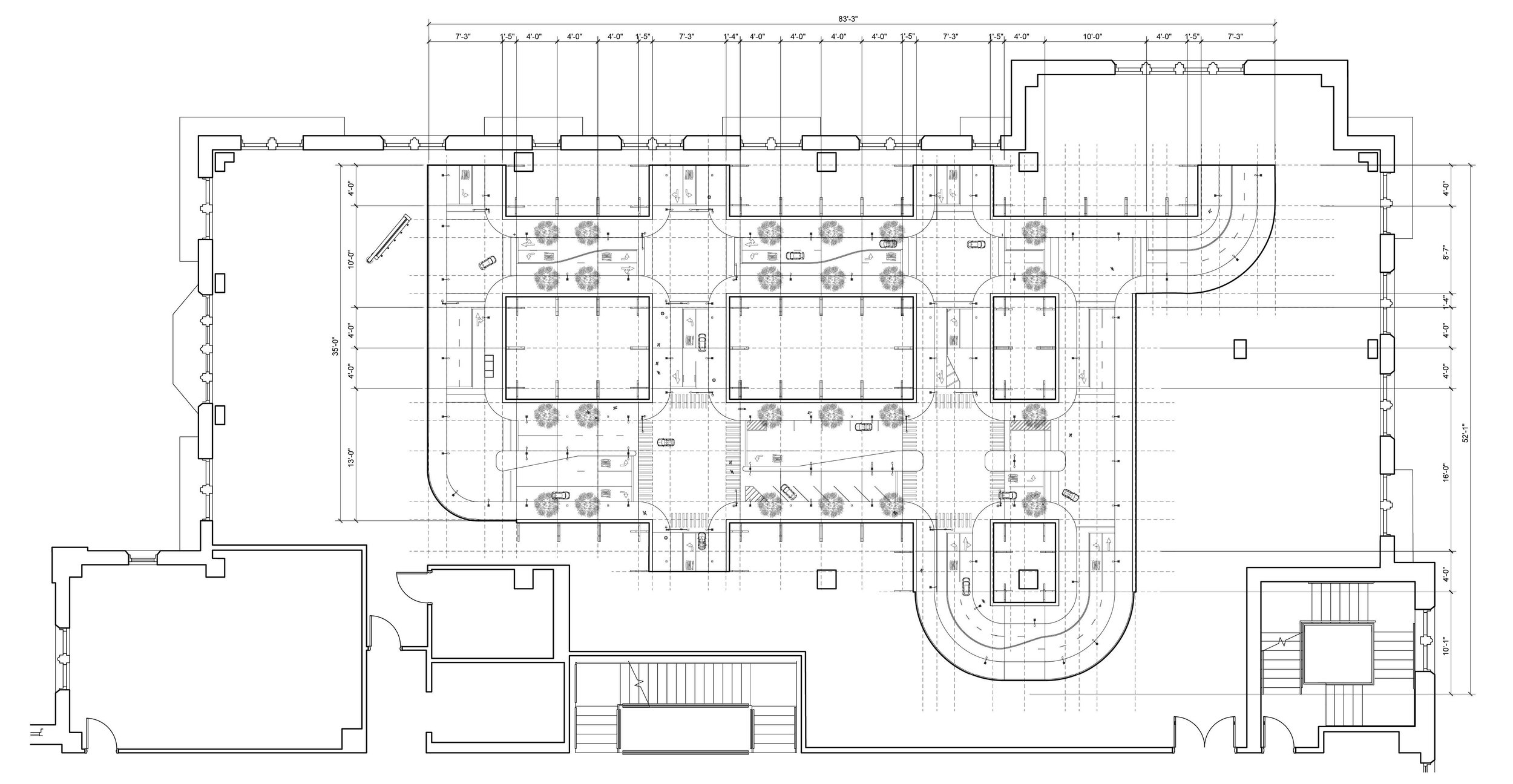

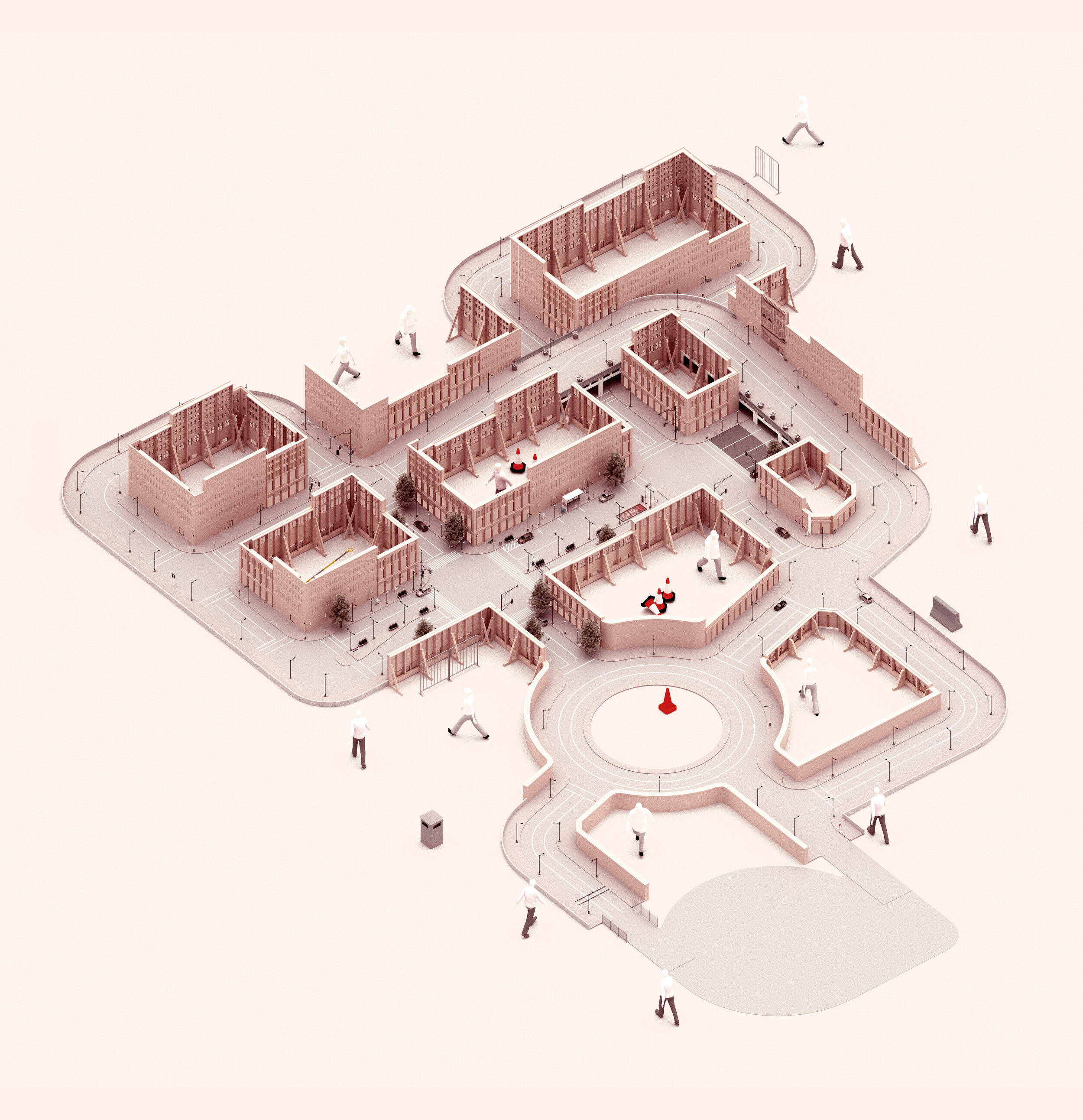

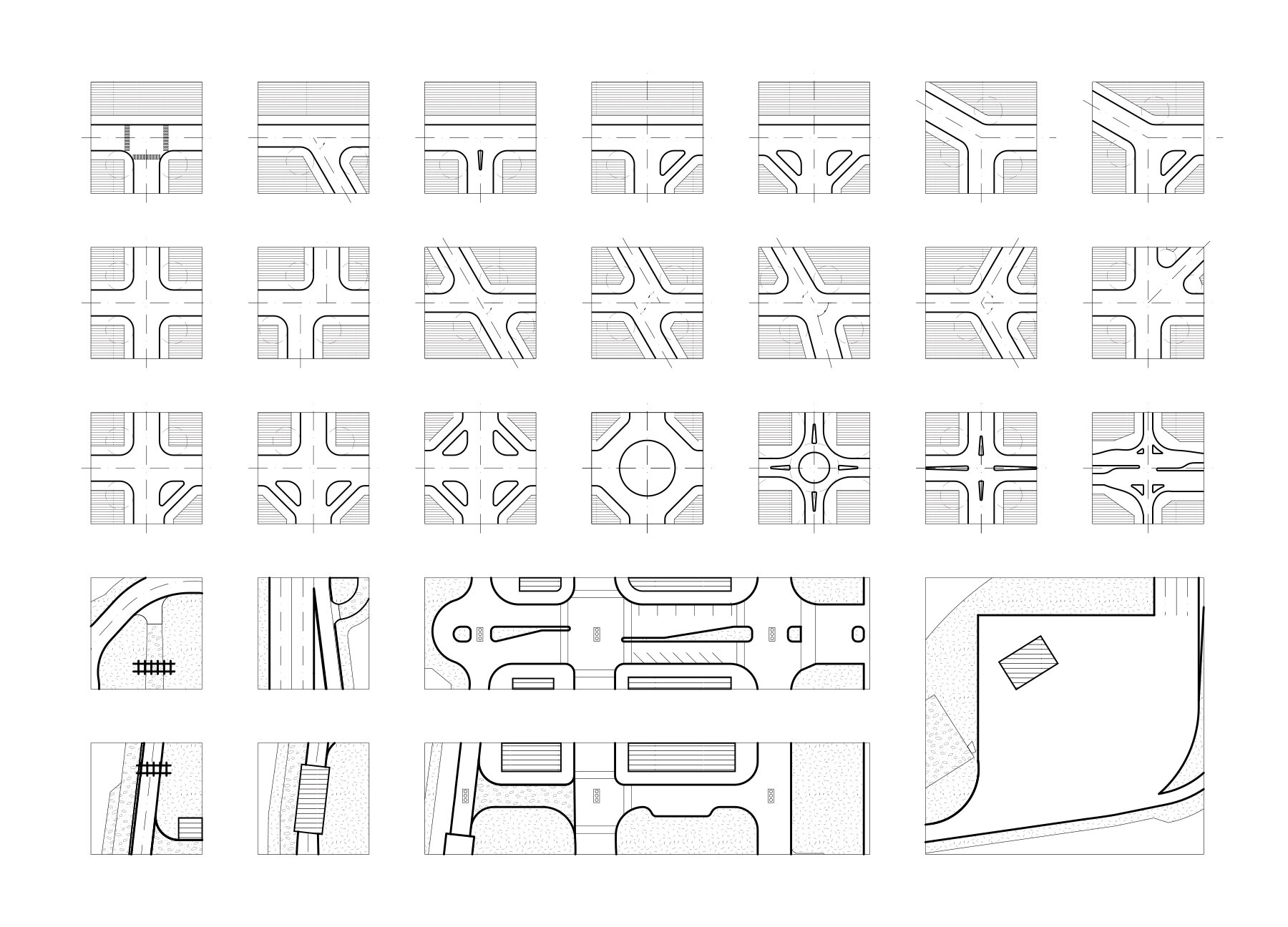

To pursue this research, the research team is building a 1:8 scale environment in which to perform tests using an F1/10 model autonomous vehicle of the same scale.[9] F1/10 is a miniature autonomous car platform currently used largely in autonomous racing competitions. However, its fully autonomous capabilities, including a full perceptual stack (the platform can mount lidar, radar, and cameras), allow for realistic physical experimentation with modern self-driving software platforms such as Apollo[10] and Autoware.[11] Our goal is to evaluate the vulnerabilities of such platforms by running them on F1/10 cars in a model city, under controlled conditions but within a realistic model of an urban driving environment. For example, we can experiment with the consequences of lidar spoofing attacks, attacks on automated localization, and camera-based attacks on street signs, traffic lights, and roads, evaluating the extent to which these can cause erroneous driving and even crashes.

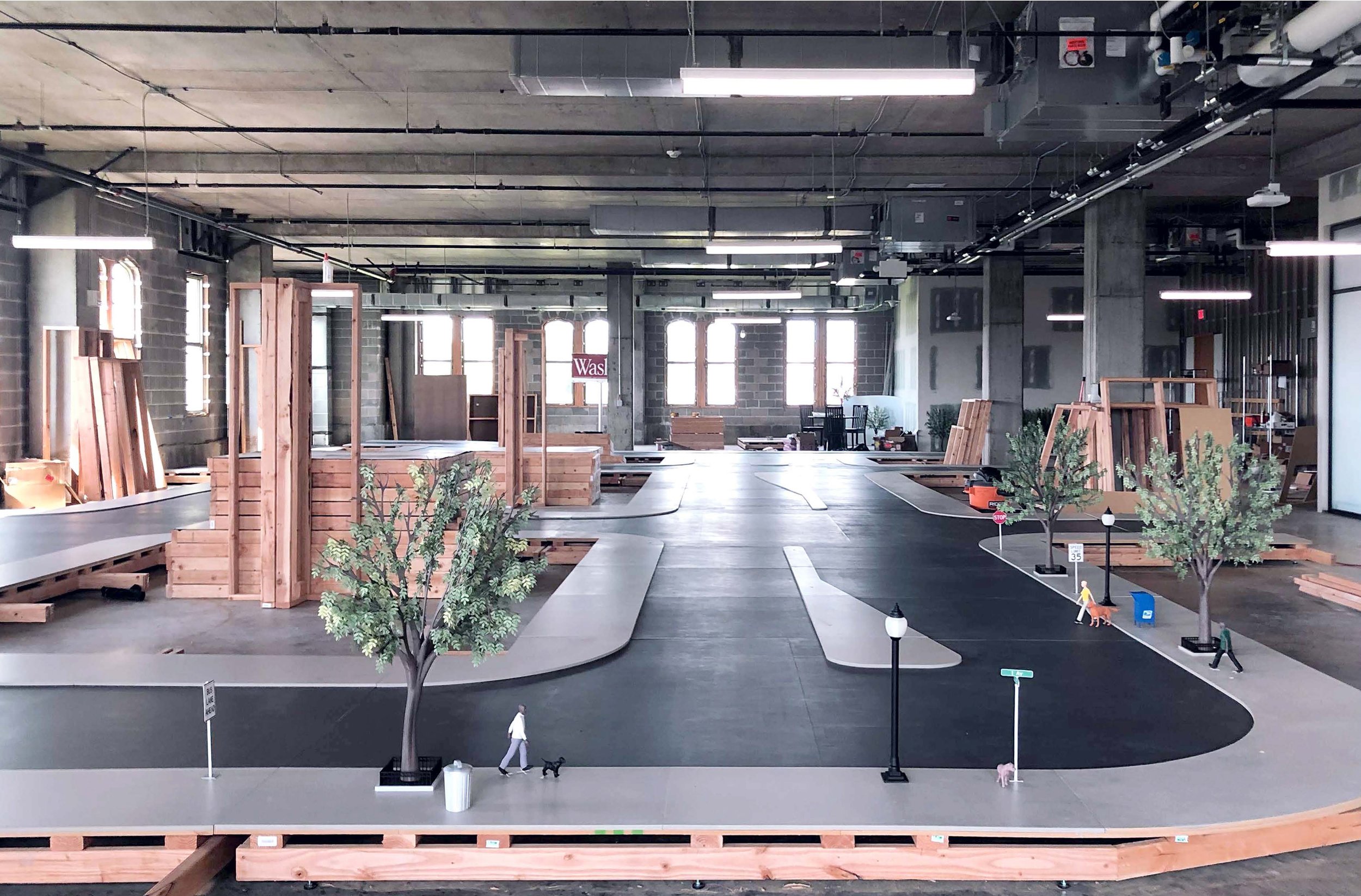

The model city, under construction in McKelvey Hall at Washington University in St. Louis, measures approximately 90’ by 30’ and presents a variety of scenarios for the car to encounter. The model is designed as an immersive environment in which building form, public space, material characteristics, and atmosphere can be controlled and transformed over time, not unlike a film set. The testing track requires material translations between full and miniature scale so that conditions are parallel across both. The work combines software that records real-world environments with software that models digital environments, and composites digital fabrication tools that shape three-dimensional form with tools that apply two-dimensional color and image.
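As a rough illustration of the scale arithmetic behind these translations, the sketch below converts lengths between the model and full scale, assuming the 1:8 ratio stated above applies uniformly to linear dimensions. The helper names and the 12-foot lane width are illustrative assumptions, not project specifications. At 1:8, the approximately 90’ by 30’ model corresponds to roughly 720’ by 240’ of city, and because areas scale with the square of the ratio, each square foot of model stands in for 64 square feet.

```python
# Hypothetical helpers for 1:8 scale conversion; the ratio comes from the text,
# while the names and example values are illustrative only.
SCALE = 8  # one model foot represents eight real feet


def to_full_scale(model_ft: float, scale: int = SCALE) -> float:
    """Convert a length measured on the model to its real-world equivalent."""
    return model_ft * scale


def to_model_scale(full_ft: float, scale: int = SCALE) -> float:
    """Convert a real-world length to the length it should have on the model."""
    return full_ft / scale


# Approximate model footprint stated in the text
model_w, model_d = 90.0, 30.0
full_w, full_d = to_full_scale(model_w), to_full_scale(model_d)
print(f"Represented footprint: {full_w:.0f}' x {full_d:.0f}'")  # 720' x 240'

# Areas scale with the square of the ratio (8 ** 2 = 64)
print(f"Model area: {model_w * model_d:,.0f} sq ft; "
      f"represented area: {full_w * full_d:,.0f} sq ft")  # 2,700 vs 172,800

# Assumed 12-foot travel lane (a common U.S. lane width) translated to the model
print(f"12-foot lane on the model: {to_model_scale(12.0)} ft")  # 1.5 ft
```

Lengths and areas scale directly in this way; properties of the physical sensors themselves, such as lidar range or camera resolution, do not shrink with the model, so those translations cannot be reduced to a single ratio.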

This research project will examine a series of questions, including:

(1) What interfaces might designers use to guide interactions between humans and autonomous agents in ways that increase public safety in cities? Phase 2 research will continue to explore how to reduce the risk of vehicle crash injuries and deaths for pedestrians and drivers alike, with a particular focus on safe pedestrian space. In our investigation, the team will examine how to modulate accessways and apertures in urban spaces and building facades shared among humans, AVs, drones, and autobots.[12] We will examine how best to create drone portals and other robotic entryways in facades. We will also study how to supplement or replace traditional traffic signals with surface textures and patterns designed for remote sensing, thereby increasing the safety of crosswalks, sidewalks, and plazas.

(2) What opportunities does autonomous mobility offer designers to innovate in the built environment in ways that improve public health? A primary focus of our Phase 2 research is making cities more walkable and promoting healthy habits. Our study will examine how networked traffic information might be used to reduce the scale of roadways, for example by shifting the center lane’s priority in response to traffic patterns.[13] Narrower roadways would leave more room for public space and green space, which in turn would promote climate resilience, improve air quality, and create healthier cities by increasing the shaded areas that encourage healthy modes of transit such as walking and biking.

(3) How can cities implement AVs in sustainable ways that support climate resilience? With no labor costs, AV ridesharing is likely to reduce the cost of individual travel. And because riders need not drive, they may work while traveling and accept longer commutes, which could increase total vehicle travel and encourage sprawl. Phase 2 research will examine how to connect AV ridesharing with public transit and bike shares through transit hubs that encourage multimodal travel.[14] We will study how such hubs may increase travel efficiency and reduce energy consumption, congestion, emissions, and sprawl. We will also analyze how piezoelectric panels might be deployed to harvest energy from mobility networks.

(4) How can cities incorporate AVs in ways that encourage equitable access to public transit? Access to public transit is particularly crucial for people with disabilities and older adults who cannot walk or drive, and for lower-income residents who cannot afford a personal vehicle. We will assess how AVs can best connect public transit to people who live outside existing bus or train routes. At the same time, reducing the need for individual vehicle ownership will allow infrastructure to shrink in scale, so that cities can avoid perpetuating, and even begin to reverse, the destruction of neighborhoods along racial and economic lines.[15]

(5) What environments reveal the most significant limitations in autonomous driving software and sensing? Recent research in adversarial machine learning has revealed a number of potential vulnerabilities.[16],[17],[18],[19] However, most such explorations are performed on digital datasets[20],[21],[22] or in simulated autonomous driving experiments.[23] Consequently, it is poorly understood whether such attacks are effective in physical urban environments. At the same time, urban driving introduces additional factors that may accentuate the vulnerabilities of AI, such as objects that move in ways that can be difficult to anticipate (cars, pedestrians), traffic congestion, and complex traffic patterns. We will investigate the practical complexity of urban driving in the miniature physical environment, where the stakes are low enough to enable far more extensive exploration, including adversarial experiments that cause vehicles to crash.

(6) How can we design autonomous driving software to be robust to the broad variety of environments it may encounter? Despite extensive prior research on the vulnerabilities of AI, there has been relatively little progress in understanding how to make AI-based perception robust to perturbations of the physical environment (so-called physical attacks). To design and evaluate robust approaches to AI-based perception, it is crucial to use physical experiments, and our proposed miniature experimental environment offers a way to do so without the unnecessary risks of experiments at full scale (i.e., with real cars). Specifically, we will explore adversarial training procedures for increasing robustness, with core research questions focused on which physical attack models are sufficient to represent a broad space of realistic physical attacks and thereby enable defenses that generalize.
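To make the idea of adversarial training more concrete, the following is a deliberately simplified sketch, not the project's procedure: a logistic-regression classifier on synthetic two-dimensional data, trained on inputs perturbed with the fast gradient sign method (FGSM) described by Goodfellow, Shlens, and Szegedy.[4] The data, the perturbation budget `eps`, the learning rate, and all function names are hypothetical, and a digital, L∞-bounded perturbation is only a stand-in for the physical attack models discussed above. The sketch shows the mechanics: at each training step, inputs are first perturbed in the direction that most increases the loss, and the model is then updated on those perturbed inputs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: two Gaussian clusters standing in for perception features
n = 200
X = np.vstack([rng.normal(-2.0, 1.0, (n, 2)), rng.normal(2.0, 1.0, (n, 2))])
y = np.concatenate([np.zeros(n), np.ones(n)])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def input_gradient(w, b, X, y):
    """Gradient of each example's logistic loss with respect to that example's input."""
    p = sigmoid(X @ w + b)
    return (p - y)[:, None] * w[None, :]


def fgsm(w, b, X, y, eps):
    """FGSM: move each input by eps per coordinate in the sign of its loss gradient."""
    return X + eps * np.sign(input_gradient(w, b, X, y))


def train(X, y, eps=0.0, lr=0.1, epochs=200):
    """Batch gradient descent on the mean logistic loss.

    With eps > 0, each step trains on FGSM examples crafted against the current model.
    """
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        X_t = fgsm(w, b, X, y, eps) if eps > 0 else X
        err = sigmoid(X_t @ w + b) - y
        w = w - lr * X_t.T @ err / len(y)
        b = b - lr * err.mean()
    return w, b


def accuracy(w, b, X, y):
    return np.mean((sigmoid(X @ w + b) > 0.5) == y)


eps = 1.0
models = {"standard": train(X, y), "adversarial": train(X, y, eps=eps)}
for name, (w, b) in models.items():
    X_attack = fgsm(w, b, X, y, eps)  # white-box attack using each model's own gradients
    print(f"{name}: clean={accuracy(w, b, X, y):.3f}, "
          f"under FGSM={accuracy(w, b, X_attack, y):.3f}")
```

The script prints clean and attacked accuracy for both models. For a linear model on symmetric data like this, the gap between the two may be small; the point of the sketch is the structure of the training loop, in which the choice of attack model (here FGSM with a fixed `eps`) determines what kind of robustness training can confer. That choice is precisely what the research question above asks about for physical attacks.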

Vale and Vorobeychik are working closely together, with Vorobeychik leading the autonomous vehicle research and Vale leading research on the built environment, allowing these interdependent aspects of the project to inform one another. As a hybrid of architectural and scientific research, the model is both a framework for advancing technology and a contribution to visual culture that can draw public interest in St. Louis and beyond.

Endnotes

[1] Lawrence Burns and Christopher Shulgan. Autonomy: The Quest to Build the Driverless Car—And How It Will Reshape Our World. Ecco, 2018.

[2] Nicholas Carlini and David Wagner. “Towards Evaluating the Robustness of Neural Networks.” In IEEE Symposium on Security and Privacy, pages 39–57, 2017.

[3] Adith Boloor, Xin He, Christopher Gill, Yevgeniy Vorobeychik, and Xuan Zhang. “Simple physical adversarial examples against end-to-end autonomous driving models.” In IEEE Conference on Embedded Software and Systems, 2019.

[4] Ian Goodfellow, Jonathon Shlens, and Christian Szegedy. “Explaining and harnessing adversarial examples.” In International Conference on Learning Representations, 2015.

[5] Kevin Eykholt, Ivan Evtimov, Earlence Fernandes, Bo Li, Amir Rahmati, Chaowei Xiao, Atul Prakash, Tadayoshi Kohno, and Dawn Xiaodong Song. “Robust physical-world attacks on deep learning visual classification.” IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 1625–1634.

[6] Keller Easterling. "Switch." E-flux Architecture, September 2017. Accessed 1 Nov. 2020. https://www.e-flux.com/architecture/positions/151186/switch/.

[7] Constance Vale. The Autonomous Future of Mobility, November 2, 2020 – March 12, 2021. Mildred Lane Kemper Art Museum, Washington University in St. Louis, St. Louis, MO.

[8] Constance Vale. "The Autonomous Future of Mobility." Expanding the View. ACSA Annual Conference Proceedings, St. Louis. 2021.

[9] Matthew O’Kelly, Houssam Abbas, Jack Harkins, Chris Kao, Yash Vardhan Pant, Rahul Mangharam, Varundev Suresh Babu, Dipshil Agarwal, Madhur Behl, Paolo Burgio, and Marko Bertogna. “F1/10: An Open-Source Autonomous Cyber-Physical Platform.” IEEE International Conference on Cyber-Physical Systems, 2019.

[10] "Apollo." http://apollo.auto/. Accessed 4 Dec. 2019.

[11] "The Autoware Foundation: Open Source for Autonomous..." https://www.autoware.org/. Accessed 4 Dec. 2019.

[12] Andrew Witt. "Feral Autonomies." E-flux Architecture. 2019. Accessed 1 Nov. 2020. https://www.e-flux.com/architecture/software/341087/feral-autonomies/.

[13] Antoine Picon. Smart Cities: A Spatialised Intelligence. AD Primer. Wiley, 2015, pp. 34–37.

[14] Easterling.

[15] Vale. The Autonomous Future of Mobility.

[16] Carlini and Wagner.

[17] Boloor, He, Gill, Vorobeychik, and Zhang.

[18] Goodfellow, Shlens, and Szegedy.

[19] Eykholt, Evtimov, Fernandes, Li, Rahmati, Xiao, Prakash, Kohno, and Song.

[20] Chong Xiang, Charles R. Qi, and Bo Li. “Generating 3D adversarial point clouds.” IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.

[21] Chaowei Xiao, Dawei Yang, Jia Deng, Mingyan Liu, and Bo Li. “MeshAdv: Adversarial meshes for visual recognition.” IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.

[22] Yulong Cao, Chaowei Xiao, Benjamin Cyr, Yimeng Zhou, Won Park, Sara Rampazzi, Qi Alfred Chen, Kevin Fu, and Z. Morley Mao. “Adversarial sensor attack on LIDAR-based perception in autonomous driving.” ACM Conference on Computer and Communications Security, 2019.

[23] Boloor, He, Gill, Vorobeychik, and Zhang.